PySpark Project- End to End Real Time Project Implementation

Course category: Data Science

Implement a PySpark real-time project. Learn a Spark coding framework. Become an experienced PySpark developer.

Description

End to End PySpark Real Time Project Implementation.

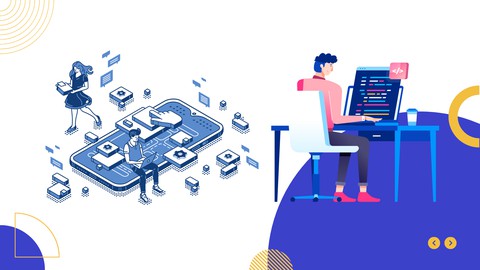

The project uses a current technology stack: Spark, Python, PyCharm, HDFS, YARN, Google Cloud, AWS, Azure, Hive, and PostgreSQL.

Learn a PySpark coding framework and how to structure code following industry-standard best practices.

Install a single-node cluster on Google Cloud and integrate the cluster with Spark.

Install Spark in standalone mode on Windows.

Integrate Spark with the PyCharm IDE.

Includes a Detailed HDFS Course.

Includes a Python Crash Course.

Understand the business model and project flow of a USA healthcare project.

Create a data pipeline that starts with data ingestion and continues through data preprocessing, data transformation, data storage, data persistence, and finally data transfer.

Learn how to add a robust logging configuration to a PySpark project.

Learn how to add an error handling mechanism to a PySpark project.

Learn how to transfer files to AWS S3.

Learn how to transfer files to Azure Blob Storage.

The project is built so that it can run fully automated.

Learn how to persist data in Apache Hive for future use and audit.

Learn how to persist data in PostgreSQL for future use and audit.

Full Integration Test.

Unit Test.
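The pipeline, logging, and error handling items above can be sketched roughly as follows. This is a minimal illustration, not the course's actual code: it uses only Python's standard `logging` module and plain lists of dictionaries as stand-ins for Spark DataFrames, and every name in it (`ingest`, `preprocess`, `transform`, `run_stage`, the `pipeline` logger, the `presc_count` field) is hypothetical.

```python
import logging
import logging.config

# Hypothetical logging setup; the logger name and format are illustrative only.
LOGGING_CONFIG = {
    "version": 1,
    "disable_existing_loggers": False,
    "formatters": {
        "standard": {"format": "%(asctime)s %(name)s %(levelname)s %(message)s"},
    },
    "handlers": {
        "console": {"class": "logging.StreamHandler", "formatter": "standard"},
    },
    "loggers": {
        "pipeline": {"handlers": ["console"], "level": "INFO", "propagate": False},
    },
}
logging.config.dictConfig(LOGGING_CONFIG)
logger = logging.getLogger("pipeline")


def ingest():
    # Stand-in for reading source files; a Spark job would load a DataFrame here.
    return [
        {"city": " austin ", "presc_count": "3"},
        {"city": "Dallas", "presc_count": None},
    ]


def preprocess(rows):
    # Drop records with a missing count, then clean up text and types.
    return [
        {"city": r["city"].strip().title(), "presc_count": int(r["presc_count"])}
        for r in rows
        if r["presc_count"] is not None
    ]


def transform(rows):
    # Aggregate the counts per city.
    totals = {}
    for r in rows:
        totals[r["city"]] = totals.get(r["city"], 0) + r["presc_count"]
    return totals


def run_stage(func, *args):
    """Run one stage, log its start and end, and re-raise any failure."""
    try:
        logger.info("Starting stage %s", func.__name__)
        result = func(*args)
        logger.info("Finished stage %s", func.__name__)
        return result
    except Exception:
        # logger.exception records the traceback before the error propagates.
        logger.exception("Stage %s failed", func.__name__)
        raise


def run_pipeline():
    raw = run_stage(ingest)
    clean = run_stage(preprocess, raw)
    return run_stage(transform, clean)


if __name__ == "__main__":
    print(run_pipeline())  # prints {'Austin': 3}
```

Wrapping each stage in one helper keeps the logging and error handling in a single place, so a failed stage stops the run with a logged traceback instead of passing bad data downstream.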

What you will learn

End to End PySpark Real Time Project Implementation.

The project uses a current technology stack: Spark, Python, PyCharm, HDFS, YARN, Google Cloud, AWS, Azure, Hive, and PostgreSQL.

Learn a PySpark coding framework and how to structure code following industry-standard best practices.

Install a single-node cluster on Google Cloud and integrate the cluster with Spark.

Install Spark in standalone mode on Windows.

Integrate Spark with the PyCharm IDE.

Includes a Detailed HDFS Course.

Includes a Python Crash Course.

Understand the business model and project flow of a USA healthcare project.

Create a data pipeline that starts with data ingestion and continues through data preprocessing, data transformation, data storage, data persistence, and finally data transfer.

Learn how to add a robust logging configuration to a PySpark project.

Learn how to add an error handling mechanism to a PySpark project.

Learn how to transfer files to AWS S3 and Azure Blob Storage.

Learn how to persist data in Hive and PostgreSQL for future use and audit (to be added shortly).

Requirements

- Basic knowledge of PySpark. You can brush up with my other course, 'Complete PySpark Developer Course'.

- Basic knowledge of HDFS (a detailed HDFS course is included in this course).

- Basic knowledge of Python (a Python crash course is included in this course).
