PyTorch for Deep Learning Bootcamp

Course type: Data Science

Learn PyTorch. Become a Deep Learning Engineer. Get Hired.

Description

What is PyTorch and why should I learn it?

PyTorch is a machine learning and deep learning framework for Python.

PyTorch enables you to craft new deep learning algorithms and use existing state-of-the-art ones, such as the neural networks powering much of today’s Artificial Intelligence (AI) applications.

Plus it's so hot right now, so there are lots of jobs available!

PyTorch is used by companies like:

Tesla to build the computer vision systems for their self-driving cars

Meta to power the curation and understanding systems for their content timelines

Apple to create computationally enhanced photography.

Want to know what's even cooler?

Much of the latest machine learning research is done and published using PyTorch code, so knowing how it works means you’ll be at the cutting edge of this highly in-demand field.

And you'll be learning PyTorch in good company.

Graduates of Zero To Mastery are now working at Google, Tesla, Amazon, Apple, IBM, Uber, Meta, Shopify + other top tech companies at the forefront of machine learning and deep learning.

This can be you.

By enrolling today, you’ll also get to join our exclusive live online community classroom to learn alongside thousands of students, alumni, mentors, TAs and Instructors.

Most importantly, you will be learning PyTorch from a professional machine learning engineer who has real-world experience and is one of the best teachers around!

What will this PyTorch course be like?

This PyTorch course is very hands-on and project based. You won't just be staring at your screen. We'll leave that for other PyTorch tutorials and courses.

In this course you'll actually be:

Running experiments

Completing exercises to test your skills

Building real-world deep learning models and projects that mimic real-life scenarios

By the end of it all, you'll have the skillset needed to identify and develop modern deep learning solutions to the kinds of problems Big Tech companies encounter.

⚠ Fair warning: this course is very comprehensive. But don't be intimidated: Daniel will teach you everything from scratch, step by step!

Here's what you'll learn in this PyTorch course:

1. PyTorch Fundamentals — We start with the bare-bones fundamentals, so even if you're a beginner you'll get up to speed.

In machine learning, data gets represented as tensors (multi-dimensional arrays of numbers). Learning how to craft tensors with PyTorch is paramount to building machine learning algorithms. In PyTorch Fundamentals we cover the PyTorch tensor datatype in depth.
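A minimal sketch of what creating and inspecting tensors looks like in PyTorch. The values are arbitrary examples chosen for illustration, not taken from the course material:

```python
import torch

# Tensors of increasing rank: a scalar (0 dims), a vector (1 dim) and a matrix (2 dims)
scalar = torch.tensor(7)
vector = torch.tensor([1.0, 2.0, 3.0])
matrix = torch.tensor([[1.0, 2.0], [3.0, 4.0]])

print(scalar.ndim, vector.ndim, matrix.ndim)  # 0 1 2
print(matrix.shape)                           # torch.Size([2, 2])
print(matrix.dtype)                           # torch.float32 (the default float type)

# Element-wise multiplication vs. matrix multiplication
elementwise = matrix * matrix  # [[1., 4.], [9., 16.]]
matmul = matrix @ matrix       # [[7., 10.], [15., 22.]]

# Move a tensor to a GPU if one is available, otherwise keep it on the CPU
device = "cuda" if torch.cuda.is_available() else "cpu"
matrix_on_device = matrix.to(device)
```

Shape, dtype and device are the three attributes most worth checking when PyTorch code raises an error.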

2. PyTorch Workflow — Okay, you’ve got the fundamentals down, and you've made some tensors to represent data, but what now?

With PyTorch Workflow you’ll learn the steps to go from data -> tensors -> trained neural network model. You’ll see and use these steps wherever you encounter PyTorch code as well as for the rest of the course.
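The data -> tensors -> trained model steps can be sketched with a toy linear regression. This is a minimal sketch under stated assumptions: the "true" parameters (0.7 and 0.3), the MSE loss and the learning rate are illustrative choices, not necessarily what the course uses.

```python
import torch
from torch import nn

torch.manual_seed(42)

# 1. Data -> tensors: points on the line y = 0.7x + 0.3 (made-up "true" parameters)
X = torch.arange(0, 1, 0.02).unsqueeze(dim=1)  # shape [50, 1]
y = 0.7 * X + 0.3

# Split into training and test sets (80/20)
split = int(0.8 * len(X))
X_train, y_train = X[:split], y[:split]
X_test, y_test = X[split:], y[split:]

# 2. Build a model: a single linear layer learns one weight and one bias
model = nn.Linear(in_features=1, out_features=1)

# 3. Pick a loss function and an optimizer
loss_fn = nn.MSELoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

# 4. Training loop: forward pass, loss, zero grads, backpropagation, update
for epoch in range(1000):
    model.train()
    y_pred = model(X_train)
    loss = loss_fn(y_pred, y_train)
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()

# 5. Evaluate on unseen data without tracking gradients
model.eval()
with torch.inference_mode():
    test_loss = loss_fn(model(X_test), y_test).item()

print(f"weight={model.weight.item():.3f}, bias={model.bias.item():.3f}, test_loss={test_loss:.6f}")
```

After training, the learned weight and bias should land close to 0.7 and 0.3, which is an easy sanity check that the loop is wired up correctly.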

3. PyTorch Neural Network Classification — Classification is one of the most common machine learning problems.

Is something one thing or another?

Is an email spam or not spam?

Is a credit card transaction fraudulent or not?

With PyTorch Neural Network Classification you’ll learn how to code a neural network classification model using PyTorch so that you can classify things and answer these questions.
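A minimal sketch of a binary classifier. The toy dataset (label a 2D point 1 if its coordinates sum to more than 0) and the layer sizes are assumptions made for illustration; a spam or fraud model follows the same pattern with real features.

```python
import torch
from torch import nn

torch.manual_seed(42)

# Toy binary problem (made up for illustration): label is 1 if x0 + x1 > 0, else 0
X = torch.randn(500, 2)
y = (X[:, 0] + X[:, 1] > 0).float().unsqueeze(dim=1)  # shape [500, 1]

# A small network with a non-linear activation between linear layers
model = nn.Sequential(
    nn.Linear(2, 8),
    nn.ReLU(),
    nn.Linear(8, 1),  # outputs raw scores (logits)
)

# BCEWithLogitsLoss applies the sigmoid internally, which is more numerically stable
loss_fn = nn.BCEWithLogitsLoss()
optimizer = torch.optim.Adam(model.parameters(), lr=0.01)

for epoch in range(500):
    logits = model(X)
    loss = loss_fn(logits, y)
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()

# Logits -> probabilities (sigmoid) -> class labels (threshold at 0.5)
with torch.inference_mode():
    preds = (torch.sigmoid(model(X)) > 0.5).float()
accuracy = (preds == y).float().mean().item()
print(f"training accuracy: {accuracy:.2%}")
```

For more than two classes, the usual swap is `nn.CrossEntropyLoss` with one output per class and `argmax` instead of a 0.5 threshold.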

4. PyTorch Computer Vision — Neural networks have changed the game of computer vision forever. And now PyTorch drives many of the latest advancements in computer vision algorithms.

For example, Tesla uses PyTorch to build the computer vision algorithms for its self-driving software.

With PyTorch Computer Vision you’ll build a PyTorch neural network capable of seeing patterns in images and classifying them into different categories.
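A minimal sketch of a small convolutional neural network (CNN) for image classification. The class name `TinyCNN`, the layer sizes, the 3 output classes and the 64x64 input size are all assumptions for illustration:

```python
import torch
from torch import nn


class TinyCNN(nn.Module):
    """A small convolutional classifier (hypothetical layer sizes, for illustration)."""

    def __init__(self, in_channels: int = 3, hidden_units: int = 10, num_classes: int = 3):
        super().__init__()
        # Convolution layers learn local patterns (edges, textures); pooling halves height/width
        self.features = nn.Sequential(
            nn.Conv2d(in_channels, hidden_units, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=2),
            nn.Conv2d(hidden_units, hidden_units, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=2),
        )
        # For 64x64 inputs, two 2x2 poolings leave 16x16 feature maps
        self.classifier = nn.Sequential(
            nn.Flatten(),
            nn.Linear(hidden_units * 16 * 16, num_classes),
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.classifier(self.features(x))


model = TinyCNN()
images = torch.randn(4, 3, 64, 64)  # a fake batch: [batch, color channels, height, width]
logits = model(images)
print(logits.shape)                 # torch.Size([4, 3]): one score per class per image
predicted_classes = logits.argmax(dim=1)
```

The hard-coded `16 * 16` ties the classifier to 64x64 inputs; a different image size needs a different value there.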

5. PyTorch Custom Datasets — The magic of machine learning is building algorithms to find patterns in your own custom data. There are plenty of existing datasets out there, but how do you load your own custom dataset into PyTorch?

This is exactly what you'll learn with the PyTorch Custom Datasets section of this course.

You’ll learn how to load an image dataset for FoodVision Mini: a PyTorch computer vision model capable of classifying images of pizza, steak and sushi (am I making you hungry to learn yet?!).

We’ll be building upon FoodVision Mini for the rest of the course.

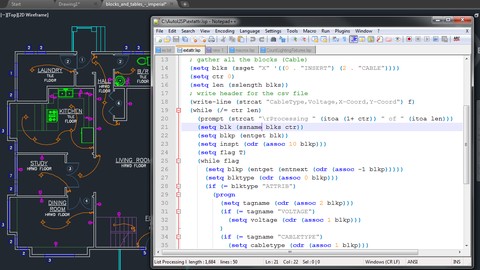
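Loading your own data usually means subclassing `torch.utils.data.Dataset` and implementing `__len__` and `__getitem__`. The sketch below assumes a folder layout of `root/<class_name>/<image>.jpg` (for example `pizza/`, `steak/`, `sushi/`) and uses Pillow and NumPy to read images; the class name `ImageFolderDataset` and the example path are hypothetical.

```python
from pathlib import Path

import numpy as np
import torch
from PIL import Image
from torch.utils.data import DataLoader, Dataset


class ImageFolderDataset(Dataset):
    """Loads images stored as root/<class_name>/<image>.jpg (an assumed folder layout)."""

    def __init__(self, root, transform=None):
        self.paths = sorted(Path(root).glob("*/*.jpg"))
        # Class names come from the sub-folder names, e.g. ["pizza", "steak", "sushi"]
        self.classes = sorted({path.parent.name for path in self.paths})
        self.class_to_idx = {name: idx for idx, name in enumerate(self.classes)}
        self.transform = transform

    def __len__(self) -> int:
        return len(self.paths)

    def __getitem__(self, index: int):
        path = self.paths[index]
        with Image.open(path) as img:
            img = img.convert("RGB")
        if self.transform is not None:
            image = self.transform(img)
        else:
            # HWC uint8 array -> CHW float tensor scaled to [0, 1]
            image = torch.from_numpy(np.array(img)).permute(2, 0, 1).float() / 255.0
        label = self.class_to_idx[path.parent.name]
        return image, label


# Usage (hypothetical path): wrap the dataset in a DataLoader to get shuffled batches
# train_data = ImageFolderDataset("data/pizza_steak_sushi/train")
# train_loader = DataLoader(train_data, batch_size=32, shuffle=True)
```

Without a `transform` that resizes images, batching only works if every image has the same size; torchvision's `ImageFolder` is a ready-made alternative for this same folder layout.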

6. PyTorch Going Modular — The whole point of PyTorch is to be able to write Pythonic machine learning code.

There are two main tools for writing machine learning code with Python:

A Jupyter/Google Colab notebook (great for experimenting)

Python scripts (great for reproducibility and modularity)

In the PyTorch Going Modular section of this course, you’ll learn how to take your most useful Jupyter/Google Colab Notebook code and turn it into reusable Python scripts. This is often how you’ll find PyTorch code shared in the wild.
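A minimal sketch of what notebook code can look like once it is moved into a script: reusable functions, command-line hyperparameters via `argparse`, and an `if __name__ == "__main__":` entry point. The file name `train.py`, the function names and the placeholder data are all hypothetical.

```python
"""train.py: a hypothetical script version of notebook training code."""
import argparse

import torch
from torch import nn


def build_model(hidden_units: int) -> nn.Module:
    """Reusable model factory: the kind of code that moves out of a notebook cell."""
    return nn.Sequential(nn.Linear(2, hidden_units), nn.ReLU(), nn.Linear(hidden_units, 1))


def train(model: nn.Module, X: torch.Tensor, y: torch.Tensor, epochs: int, lr: float) -> float:
    """Trains `model` on (X, y) and returns the loss computed in the last epoch."""
    loss_fn = nn.BCEWithLogitsLoss()
    optimizer = torch.optim.Adam(model.parameters(), lr=lr)
    for _ in range(epochs):
        loss = loss_fn(model(X), y)
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
    return loss.item()


def main(argv=None) -> float:
    # Hyperparameters come from the command line instead of being edited in a cell
    parser = argparse.ArgumentParser(description="Train a small classifier.")
    parser.add_argument("--epochs", type=int, default=100)
    parser.add_argument("--hidden-units", type=int, default=8)
    parser.add_argument("--lr", type=float, default=0.01)
    args = parser.parse_args(argv)

    torch.manual_seed(42)
    X = torch.randn(200, 2)  # placeholder data for the sketch
    y = (X[:, 0] + X[:, 1] > 0).float().unsqueeze(dim=1)
    model = build_model(args.hidden_units)
    final_loss = train(model, X, y, args.epochs, args.lr)
    print(f"final training loss: {final_loss:.4f}")
    return final_loss


if __name__ == "__main__":
    main()
```

Run from a terminal as, for example, `python train.py --epochs 20 --hidden-units 16`; other scripts can also `import` `build_model` and `train` directly.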

7. PyTorch Transfer Learning — What if you could take what one model has learned and leverage it for your own problems? That’s what PyTorch Transfer Learning covers.

You’ll learn about the power of transfer learning and how it enables you to take a machine learning model trained on millions of images, modify it slightly, and enhance the performance of FoodVision Mini, saving you time and resources.

8. PyTorch Experiment Tracking — Now we're going to start cooking with heat by starting Part 1 of our Milestone Project of the course!

At this point you’ll have built plenty of PyTorch models. But how do you keep track of which model performs the best?

That’s where PyTorch Experiment Tracking comes in.

Following the machine learning practitioner’s motto of "experiment, experiment, experiment!", you’ll set up a system to keep track of various FoodVision Mini experiment results and then compare them to find the best one.
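A minimal sketch of experiment tracking: run every combination of settings, log each result, save the log, and pick the best run. PyTorch also integrates with TensorBoard through `torch.utils.tensorboard.SummaryWriter`; the plain JSON log below is a simpler stand-in and an assumption, not necessarily the course's setup. `run_experiment` is a hypothetical placeholder for a real training run.

```python
import json

import torch
from torch import nn


def run_experiment(hidden_units: int, epochs: int, seed: int = 42) -> float:
    """Hypothetical stand-in for a full training run: returns final training accuracy."""
    torch.manual_seed(seed)
    X = torch.randn(300, 2)
    y = (X[:, 0] * X[:, 1] > 0).float().unsqueeze(dim=1)  # XOR-like toy labels
    model = nn.Sequential(nn.Linear(2, hidden_units), nn.ReLU(), nn.Linear(hidden_units, 1))
    loss_fn = nn.BCEWithLogitsLoss()
    optimizer = torch.optim.Adam(model.parameters(), lr=0.01)
    for _ in range(epochs):
        loss = loss_fn(model(X), y)
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
    with torch.inference_mode():
        preds = (torch.sigmoid(model(X)) > 0.5).float()
    return (preds == y).float().mean().item()


# Every combination of settings is one experiment
results = []
for hidden_units in [2, 16]:
    for epochs in [10, 200]:
        accuracy = run_experiment(hidden_units, epochs)
        results.append({"hidden_units": hidden_units, "epochs": epochs, "accuracy": accuracy})

# Persist the results so runs can be compared later, then pick the best one
with open("experiments.json", "w") as f:
    json.dump(results, f, indent=2)

best = max(results, key=lambda r: r["accuracy"])
print("best experiment:", best)
```

Fixing the random seed inside each run keeps the comparison fair: differences in accuracy come from the settings, not from different random initializations.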

9. PyTorch Paper Replicating — The field of machine learning advances quickly. New research papers get published every day. Being able to read and understand these papers takes time and practice.

So that’s what PyTorch Paper Replicating covers. You’ll learn how to go through a machine learning research paper and replicate it with PyTorch code.

At this point you'll also undertake Part 2 of our Milestone Project, where you’ll replicate the groundbreaking Vision Transformer architecture!
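As an example of turning one piece of a paper into code, here is a minimal sketch of the Vision Transformer's patch embedding step, which splits an image into fixed-size patches and projects each one to a vector. The defaults (224x224 images, 16x16 patches, 768-dimensional embeddings) follow the ViT-Base/16 configuration from the original ViT paper; the class token, position embeddings and Transformer encoder are left out, and the class name `PatchEmbedding` is an illustrative choice.

```python
import torch
from torch import nn


class PatchEmbedding(nn.Module):
    """Turns an image into a sequence of flattened, linearly projected patches (ViT-style)."""

    def __init__(self, in_channels: int = 3, patch_size: int = 16, embedding_dim: int = 768):
        super().__init__()
        # A conv with kernel_size == stride == patch_size sees each patch exactly once,
        # which is equivalent to flattening each patch and applying one shared linear layer
        self.proj = nn.Conv2d(in_channels, embedding_dim, kernel_size=patch_size, stride=patch_size)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        x = self.proj(x)            # [B, D, H/P, W/P]
        x = x.flatten(start_dim=2)  # [B, D, N] where N = (H/P) * (W/P)
        return x.transpose(1, 2)    # [B, N, D]: one embedding per patch


images = torch.randn(1, 3, 224, 224)
patches = PatchEmbedding()(images)
print(patches.shape)  # torch.Size([1, 196, 768]): (224 / 16) ** 2 = 196 patches
```

The output is a sequence of 196 "tokens", which is the input format the Transformer encoder expects.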

10. PyTorch Model Deployment — By this stage your FoodVision model will be performing quite well. But up until now, you’ve been the only one with access to it.

How do you get your PyTorch models into the hands of others?

That’s what PyTorch Model Deployment covers. In Part 3 of your Milestone Project, you’ll learn how to take the best performing FoodVision Mini model and deploy it to the web so other people can access it and try it out with their own food images.
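Deployment typically involves saving the trained weights, reloading them in the serving environment, and wrapping the model in a prediction function that a web interface can call. A minimal sketch, assuming a hypothetical tiny `build_model` that stands in for the trained FoodVision Mini model; returning a dictionary of class probabilities is a format many demo UIs accept (for example, Gradio's `Label` component takes label-to-confidence pairs), but the exact web setup is not reproduced here.

```python
import time

import torch
from torch import nn

CLASS_NAMES = ["pizza", "steak", "sushi"]


def build_model() -> nn.Module:
    # Hypothetical tiny model standing in for the trained FoodVision Mini model
    return nn.Sequential(nn.Flatten(), nn.Linear(3 * 64 * 64, len(CLASS_NAMES)))


def save_model(model: nn.Module, path: str) -> None:
    # Save only the learned parameters (the state_dict), not the whole Python object
    torch.save(model.state_dict(), path)


def load_model(path: str) -> nn.Module:
    model = build_model()                    # recreate the same architecture...
    model.load_state_dict(torch.load(path))  # ...then load the trained weights into it
    model.eval()
    return model


def predict(model: nn.Module, image: torch.Tensor) -> tuple[dict[str, float], float]:
    """Returns {class name: probability} for one [C, H, W] image and the time taken in seconds."""
    start = time.perf_counter()
    with torch.inference_mode():
        probs = torch.softmax(model(image.unsqueeze(dim=0)), dim=1).squeeze(dim=0)
    elapsed = time.perf_counter() - start
    return {name: probs[i].item() for i, name in enumerate(CLASS_NAMES)}, elapsed


save_model(build_model(), "foodvision_mini.pth")
model = load_model("foodvision_mini.pth")
probs, seconds = predict(model, torch.rand(3, 64, 64))
print(probs, f"{seconds:.4f}s")
```

Timing each prediction matters for deployment because a model that is accurate but slow can still make for a poor web experience.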

What's the bottom line?

Machine learning's growth and adoption are exploding, and deep learning is how you take your machine learning knowledge to the next level. More and more job openings call for this specialized knowledge.

Companies like Tesla, Microsoft, OpenAI, Meta (Facebook + Instagram), Airbnb and many others are currently powered by PyTorch.

And this is the most comprehensive online bootcamp to learn PyTorch and kickstart your career as a Deep Learning Engineer.

So why wait? Advance your career and earn a higher salary by mastering PyTorch and adding deep learning to your toolkit!

What you'll learn

Everything from getting started with using PyTorch to building your own real-world models

Understand how to integrate Deep Learning into tools and applications

Build and deploy your own custom trained PyTorch neural network accessible to the public

Master deep learning and become a top candidate for recruiters seeking Deep Learning Engineers

The skills you need to become a Deep Learning Engineer and get hired with a chance of making US$100,000+ / year

Why PyTorch is a fantastic way to start working in machine learning

Create and utilize machine learning algorithms just like you would write a Python program

How to take data, build an ML algorithm to find patterns in it, and then use that algorithm as an AI to enhance your applications

To expand your Machine Learning and Deep Learning skills and toolkit

Requirements

- A computer (Linux/Windows/Mac) with an internet connection is required

- Basic Python knowledge is required

- Previous Machine Learning knowledge is recommended, but not required (we provide sufficient supplementary resources to get you up to speed!)
